We’ve all heard it. In the face of COVID and climate-change denial and antivax nonsense, progressives, liberals, and sane conservatives often respond: “Trust science!”

It’s an understandable response, and far more reasonable than some of the crackpot conspiracy theories ricocheting around the intertubes. But “trust science” glosses over a lot of complexities and uncomfortable history—history that we shouldn’t ignore.

Science is a process, not a product. The scientific method seeks to discern facts by testing hypotheses in as objective a manner as possible, eliminating or controlling for any confounding factors that might skew the results. It’s a process that has led to astounding things, from space telescopes that can see back nearly to the dawn of the universe to the open-heart surgery that added 20 years to my father’s life.

But that process is run by humans—flawed, imperfect creatures even on our best days. Humans, try as we might to be objective and impartial, have prejudices and preconceptions. We can be influenced, consciously or unconsciously, by career pressures, funding issues and all sorts of things. Scientists possess no miraculous immunity to the frailties of the human personality, and that sometimes leads science astray.

Eugenics, now generally regarded with horror, was once widely accepted. Much of it was based on so-called “intelligence tests,” as the National Education Association explains:

In his 1923 book, A Study of American Intelligence, psychologist and eugenicist Carl Brigham wrote that African-Americans were on the low end of the racial, ethnic, and/or cultural spectrum. Testing, he believed, showed the superiority of “the Nordic race group” and warned of the “promiscuous intermingling” of new immigrants in the American gene pool.

In his book, Not Fit for Our Society, Peter Schrag adds, “Henry H. Goddard, one of the American pioneers of intelligence testing, found that 40 percent of Ellis Island immigrants were feebleminded and that 60 percent of Jews there ‘classify as morons.’”

From a 21st-century perspective, the cultural biases in some of these tests seem so obvious as to be laughable. For example, the tests were conducted in English but typically made no attempt to account for the fact that many of the immigrants being tested were just starting to learn English as a second language. A century ago, the researchers either couldn’t see the bias or chose not to.

And this was mainstream stuff, pursued by scientists from Harvard and Princeton and published by the National Academy of Sciences, among others. It became the basis for discriminatory immigration laws and even forced sterilization.

For another kind of bias, consider the National Institute on Drug Abuse, created at the tail end of the Nixon administration to continue and expand upon the work of the Drug Abuse Warning Network and the National Household Survey on Drug Abuse. NIDA, which had a budget of nearly $1.5 billion last year and describes itself as the “largest supporter of the world’s research on drug use and addiction,” has never let go of the guiding principle that drug use is a problem to be fixed, rather than a facet of human behavior, with positive and negative aspects, that needs to be fully understood. NIDA’s FAQ page makes this abundantly clear, with sections on addiction, withdrawal and the costs of drug use to society, but zero mention of any non-harmful aspects of substance use.

“NIDA is political … NIDA’s mission is to frighten the American public about drugs,” says Columbia University researcher Dr. Carl Hart, author of Drug Use for Grown-Ups. Hart, who’s done plenty of NIDA-funded research, isn’t referring to partisan politics but to the broader political aspect of the war on drugs: For decades the US government has pursued a policy of trying to eliminate use of unauthorized substances, and an agency within that government can’t stray too far from the official line.

UCSF researcher Dr. Donald Abrams experienced this when he attempted to study medical use of cannabis by HIV/AIDS patients in the 1990s. In a series of stories I reported for AIDS Treatment News and other publications when California was legalizing medical cannabis, he described the succession of rejections from NIDA and the other federal authorities that had to approve any research on a Schedule I drug as “an endless labyrinth of closed doors. … The science is barely surviving the politics.” In another conversation he recalled then-NIDA Director Alan Leshner telling him, “We’re the National Institute on Drug Abuse, not the National Institute for Drug Abuse.”

Abrams only got his study approved after reconfiguring his proposal to emphasize safety questions: Were HIV patients hurting themselves by using cannabis? The feds were much more open to studying dangers of a banned substance than its potential benefits.

None of this means everything NIDA does is bad (far from it), and some attitudes may have softened over the years. Eventually, with NIDA’s blessing, Abrams did publish a study showing that cannabis safely and effectively relieved painful peripheral neuropathy in HIV patients. But Leshner’s remark described the mentality that guides the institute’s priorities.

Yale University epidemiologist Dr. Gregg Gonsalves says he wants “more people to build their own personal connection to data—understand basic concepts. This can start in schools but can also be public education campaigns.”

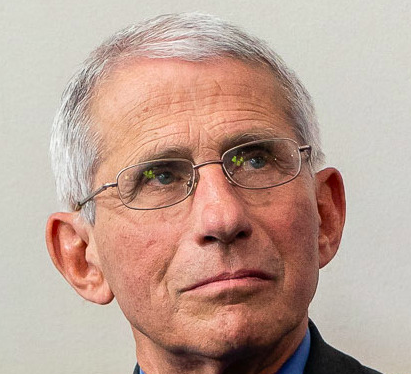

Gonsalves has been down that road before as a member of the ACT UP New York Treatment and Data Committee and co-founder of the Treatment Action Group. He was one of the AIDS activists who, seeing a scientific establishment oblivious to the needs of people with HIV and unwilling to listen to them, dug in, learned the science, and successfully pressured Dr. Anthony Fauci and the Food and Drug Administration to take their demands seriously.

They didn’t disdain the scientific method, but learned it, engaged with it, and forced the research establishment to confront its own dysfunctional habits and biases.

It was hard work, but it changed how clinical drug trials are done and how medicines get approved. AIDS activists learned to tell the difference between what was useful in the traditional ways of doing things and what was merely the residue of old habits and prejudices. That’s a much grittier, more complex process than comparing Dr. Fauci to Josef Mengele and dismissing everything he says.

Hart and Gonsalves agree that bias is real, and that confronting it requires an understanding of how science works and how to look at data—an understanding that too many Americans lack. “This is what I’ve been emphasizing to my students for decades,” Hart says. “Never trust the scientists, always look at the data. You hope that people have some ability to evaluate the data.”

Gonsalves puts it bluntly: “The point is that you have to get involved. You need to know your shit, need to be humble about your knowledge and open to learn.” That humility is central, and strikingly absent from most of the social media rantings of antivaxxers and climate deniers. To engage with science and data, you need to be alert to your own biases as well as those of the people you’re critiquing. Cognitive bias is real and affects us all.

One reason Americans have so much trouble wrestling with scientific issues like COVID and climate change is that, to be blunt, we’re largely a nation of scientific illiterates. As Hart notes, too many struggle with elementary concepts like the fact that correlation or association doesn’t equal causation. That two things happen together, or even one after the other, proves nothing about what caused what. If I wake up tomorrow, make a pot of coffee, and a few minutes later it starts to rain, that does not prove that making coffee causes rain.

Similarly, the fact that teen marijuana use is “associated” with poorer grades doesn’t tell you whether weed causes poor grades. “There can be all kinds of other variables that cause that, and our job is to look for those variables,” Hart says. “Most people are fooled by that simple conversation.” It could just as easily be that doing poorly in school causes kids to distract themselves with weed or other substances, or different, unknown factors could be driving both outcomes.
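Hart’s point about hidden variables can be made concrete with a small simulation. This is a purely illustrative sketch, not real data: it assumes a hypothetical confounder (think of it as “trouble at home”) and invented probabilities and grade averages. By construction, cannabis use has no effect at all on grades, yet users still show lower average grades overall. Comparing students who share the same level of the confounder makes the gap essentially disappear.

```python
import random
import statistics

def simulate(n: int = 100_000, seed: int = 0):
    """Simulate students where a hidden factor drives BOTH cannabis use and grades.

    All numbers are hypothetical. By construction, cannabis use has zero
    causal effect on grades in this model.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        # Hypothetical confounder (e.g. trouble at home), true for ~30% of students.
        confounder = rng.random() < 0.3
        # The confounder raises the chance of using cannabis...
        uses = rng.random() < (0.4 if confounder else 0.1)
        # ...and independently lowers grades. Note that `uses` never appears here.
        grade = rng.gauss(70 if confounder else 80, 10)
        rows.append((confounder, uses, grade))
    return rows

def mean_gap(rows):
    """Average grade of non-users minus average grade of users."""
    users = [g for _, u, g in rows if u]
    non_users = [g for _, u, g in rows if not u]
    return statistics.mean(non_users) - statistics.mean(users)

rows = simulate()
# Across everyone, users appear to do worse in school.
print(f"Overall gap: {mean_gap(rows):.1f} points")
# Within each level of the confounder, the gap is close to zero.
for level in (False, True):
    stratum = [r for r in rows if r[0] == level]
    print(f"Gap when confounder={level}: {mean_gap(stratum):.1f} points")
```

Splitting the data by the confounder is the simplest version of what Hart calls looking for those variables. Real studies face a harder problem: they can only adjust for variables they thought of and actually measured.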

Hart calls lack of scientific literacy “a huge problem.”

Other basic concepts, like sampling error and statistical significance (think, for example, of a political poll’s margin of error), get ignored or misrepresented in the daily news and social media discourse on scientific matters. And the problem isn’t limited to discussions of science.

How often have you seen a poll showing one candidate ahead by one or two points reported as showing that candidate is leading? That’s wrong. Political polls typically have a margin of error of around three percentage points or more (usually stated at a 95 percent confidence level), meaning each candidate’s true support could plausibly be that much higher or lower than the reported figure. The uncertainty on the gap between two candidates is larger still, so a two-point lead is a statistical tie. Whether the realm is politics or science, discussion that glosses over these critical details makes us dumber.
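The arithmetic behind a poll’s margin of error is short enough to check for yourself. This sketch assumes a simple random sample and the standard normal approximation at 95 percent confidence; real pollsters weight their samples, which usually widens the true margin. The poll numbers are hypothetical. The second function computes the margin on the gap between two candidates in the same poll, which comes out roughly double the margin on either candidate alone when the two split most of the vote.

```python
import math

Z_95 = 1.96  # z-score for a 95% confidence level (normal approximation)

def margin_of_error(p: float, n: int, z: float = Z_95) -> float:
    """Margin of error for one candidate's share p in a simple random sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)

def lead_margin_of_error(p1: float, p2: float, n: int, z: float = Z_95) -> float:
    """Margin of error for the lead p1 - p2 between two candidates in the same poll.

    Uses the variance of a difference of two shares from one multinomial sample:
    Var(p1 - p2) = (p1 + p2 - (p1 - p2) ** 2) / n
    """
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)

# Hypothetical poll: 1,000 respondents, candidate A at 48%, candidate B at 46%.
n, a, b = 1000, 0.48, 0.46
print(f"Margin for A alone: ±{margin_of_error(a, n) * 100:.1f} points")
print(f"Lead: {(a - b) * 100:.1f} ± {lead_margin_of_error(a, b, n) * 100:.1f} points")
```

With these numbers, each candidate’s share carries a margin of about 3.1 points, but the two-point lead carries a margin of about 6.0 points, so the poll simply cannot say who is ahead.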

There’s no quick fix for this. “We need a foundation of scientific and mathematical education in the US that we simply don’t have right now,” Gonsalves says. “Not a reason not to work on this, but we have to deal with the fundamental weaknesses in our educational system too.”

Still, there are things all of us can do. Hart urges people to look at the expertise and credentials of the person speaking or writing when evaluating what they say. That’s not a perfect guide (there are a few credentialed crackpots out there; just Google “Peter Duesberg”), but it’s a start. Try not to get medical information from pundits and poll analysts turned armchair epidemiologists.

And, Gonsalves notes, “Detecting bullshit can be taught. Carl Bergstrom at U-Washington has a whole class and book on it. Carl Sagan had some tips too. And denialism, from AIDS to vaccines, has some very specific rhetorical features, which you can be on the lookout for.”

In the short term, arming oneself with these basic tools can help a lot, even as we try to sort out the longer-term task of building scientific literacy among the American public. Still, there will inevitably be times when non-scientists hear conflicting views on a scientific issue and feel lost at sea. What then?

Here’s one approach, which Hart agrees could be useful: It’s what I call the “What If I’m Wrong Test.” Look at the competing ideas, particularly the one you’re most inclined to agree with, and ask yourself, “What if I’m wrong?” That won’t tell you which side is right, but it might help guide you to the least dangerous alternative.

For example, let’s apply this test to climate change. Broadly, views on how the world should approach climate change divide into two general camps: 1) Climate change is a crisis that demands urgent action to cut greenhouse gas emissions, or 2) Climate change is overblown and we don’t need to drastically rethink our energy system.

Let’s say I believe Number One (for the record, I do). What if I’m wrong? What if society does what I want, and it turns out not to have been necessary? We’ll spend a bunch of money we didn’t have to. Needless economic dislocations will occur as fossil fuel industries shrink and low- or zero-carbon alternatives take their place. Old jobs and businesses will go away and new ones will grow, posing serious challenges for some families and communities.

Those are significant negatives.

On the other hand, air pollution, most of which comes from burning fossil fuels, will be massively reduced, improving health and cutting medical costs. And the lifespan of those finite reserves of coal, oil and gas will have been greatly extended. Not a perfect situation, all in all, but manageable.

Now let’s say I believe Number Two, and again society follows my wishes. What if we’re wrong? Coastal cities get wiped out. Out-of-control fires, floods and hurricanes kill millions and leave hundreds of millions homeless. Shifting rainfall patterns and droughts destroy crops and fuel mass migrations of starving populations, leading to war and violence as countries with their own struggles try to keep out the desperate masses.

We’ll save some money by not converting to clean energy but will spend many times more coping with the ensuing economic and humanitarian disaster.

Even if we’re really not sure which proposition is correct, the choice is now much clearer.

In the short term, the news media could help—but they probably won’t. They could stop chasing clicks with sensationalized headlines that distort and oversimplify. They could take the time to explain basic concepts like statistical significance and cognitive bias. And they could read the damn studies they report on.

That last one is no joke. When I worked at the Marijuana Policy Project from 2001 to 2009, I was continually appalled by how many news stories about scientific studies were written by reporters who never read the actual study, just the press release. Fun fact: A press release from the institution that conducted or published a study almost never highlights the study’s weaknesses. Unfortunately, we can’t wait for the media to reform or US science education to improve. In the short term, at least, it’s on us. It’s our responsibility to, as Gonsalves said, “know your shit.”